---
title: "High Performance Networking with EC2 Virtual Private Clouds"
date: 2017-08-11
slug: high-performance-networking-with-ec2
url: /blog/2017/08/High-Performance-Networking-With-Ec2
author: >
  Juergen Brendel (Pani Networks)
  Chris Marino (Pani Networks)
---

One of the most popular platforms for running Kubernetes is Amazon Web Services’ Elastic Compute Cloud (AWS EC2). With more than a decade of experience delivering IaaS, and a rich set of services with easy-to-consume APIs added over time, EC2 has captured developer mindshare and loyalty worldwide.

When it comes to networking, however, EC2 has some limits that hinder performance and make deploying Kubernetes clusters to production unnecessarily complex. The preview release of Romana v2.0, a network and security automation solution for Cloud Native applications, includes features that address some well-known network issues when running Kubernetes in EC2.

## Traditional VPC Networking Performance Roadblocks

A Kubernetes pod network is separate from an Amazon Virtual Private Cloud (VPC) instance network; consequently, off-instance pod traffic needs a route to the destination pods. Fortunately, VPCs support setting these routes. When building a cluster network with the [kubenet](/docs/concepts/cluster-administration/network-plugins/#kubenet) plugin, whenever new nodes are added, the AWS cloud provider will automatically add a VPC route to the pods running on that node.
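To make the per-node routing concrete, here is a minimal sketch that models a route table with one entry per node. It is not kubenet or AWS cloud provider code: the cluster CIDR, the /24 per-node pod subnets, the instance IDs, and the helper names `build_pod_routes` and `next_hop` are all assumptions chosen for illustration.

```python
import ipaddress

# Hypothetical values for illustration; none of these come from the article.
CLUSTER_CIDR = ipaddress.ip_network("10.244.0.0/16")
NODES = ["i-node-a", "i-node-b", "i-node-c"]  # placeholder instance IDs


def build_pod_routes(cluster_cidr, nodes, node_prefix=24):
    """Assign one pod subnet per node and return {pod_subnet: instance_id}."""
    subnets = cluster_cidr.subnets(new_prefix=node_prefix)
    return {subnet: node for subnet, node in zip(subnets, nodes)}


def next_hop(routes, pod_ip):
    """Return the instance a packet for pod_ip would be routed to, or None."""
    addr = ipaddress.ip_address(pod_ip)
    matches = [net for net in routes if addr in net]
    if not matches:
        return None
    # Longest-prefix match, as a route table lookup would do.
    best = max(matches, key=lambda net: net.prefixlen)
    return routes[best]


routes = build_pod_routes(CLUSTER_CIDR, NODES)
for subnet, node in routes.items():
    print(f"{subnet} -> {node}")
```

Each node contributes exactly one entry in this model, so the table grows linearly with the number of nodes in the cluster.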

Using kubenet to set routes provides native VPC network performance and visibility. However, since kubenet does not support more advanced network functions like network policy for pod traffic isolation, many users choose to run a Container Network Interface (CNI) provider on the back end.

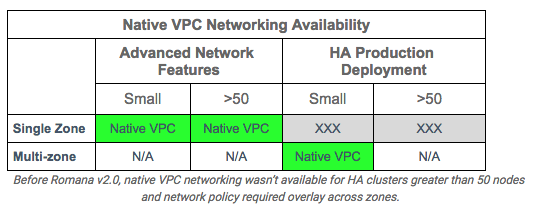

Before Romana v2.0, all CNI network providers required an overlay when used across Availability Zones (AZs), leaving CNI users who wanted to deploy high-availability (HA) clusters unable to get the performance of native VPC networking.

Even users who don’t need advanced networking encounter restrictions: VPC route tables support a maximum of 50 entries, which limits the size of a cluster to 50 nodes (or fewer, if some VPC routes are needed for other purposes). Until Romana v2.0, users also needed to run an overlay network to get around this limit.
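The arithmetic behind that ceiling can be sketched in a few lines, assuming one route table entry per node as described above. The function names `max_nodes` and `fits` and the example counts are hypothetical; only the 50-entry limit comes from the text.

```python
ROUTE_TABLE_LIMIT = 50  # per-table entry limit cited in the text


def max_nodes(reserved_routes, limit=ROUTE_TABLE_LIMIT):
    """Nodes that fit when each node consumes one route table entry."""
    if reserved_routes < 0:
        raise ValueError("reserved_routes must be non-negative")
    return max(limit - reserved_routes, 0)


def fits(node_count, reserved_routes, limit=ROUTE_TABLE_LIMIT):
    """True if node_count nodes fit alongside the reserved routes."""
    return node_count <= max_nodes(reserved_routes, limit)


# Example: three entries already used for other purposes (assumed number).
print(max_nodes(3))
print(fits(48, 3))
```

With three entries reserved for other purposes, only 47 node routes remain, so a 48-node cluster would not fit without an overlay or some form of route aggregation.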

Whether you were interested in advanced networking for traffic isolation or running large production HA clusters (or both), you were unable to get the performance and visibility of native VPC networking.