to generate transcriptions from audio (using the Whisper model) and to create embeddings for text data, as well as to generate responses to user queries (using GPT and chat completions). For more details, see [openai.com](https://openai.com/product).
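The response-generation step can be pictured with a minimal sketch that passes transcript excerpts to the Chat Completions endpoint through the official `openai` Python package (v1 client). The model name `gpt-4o-mini`, the prompt wording, and the helper names `build_messages` and `answer_question` are illustrative assumptions. They are not the application's actual choices.

```python
def build_messages(question: str, context_chunks: list[str]) -> list[dict]:
    """Assemble a chat prompt: transcript excerpts as system context, then the user's question."""
    context = "\n\n".join(context_chunks)
    system_prompt = (
        "Answer the user's question using only the video transcript excerpts below.\n\n"
        + context
    )
    return [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": question},
    ]


def answer_question(question: str, context_chunks: list[str], model: str = "gpt-4o-mini") -> str:
    """Send the assembled prompt to the Chat Completions API and return the reply text.

    The model name is an assumption; substitute whichever GPT model the app is configured for.
    """
    from openai import OpenAI  # imported lazily so build_messages works without the SDK

    client = OpenAI()  # reads OPENAI_API_KEY from the environment
    response = client.chat.completions.create(
        model=model,
        messages=build_messages(question, context_chunks),
    )
    return response.choices[0].message.content
```

Keeping prompt construction in a pure function makes it easy to inspect or test without making a network call.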

### Whisper

Whisper is an automatic speech recognition system developed by OpenAI that transcribes spoken language into text. In this application, Whisper transcribes the audio from YouTube videos, turning the video content into text that can be further processed and analyzed. For more details, see [Introducing Whisper](https://openai.com/research/whisper).
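The transcription step can be sketched with the official `openai` Python package (v1 client). This sketch assumes the YouTube audio has already been saved to a local file, because how the application fetches the audio is not described here. `whisper-1` is the model name OpenAI's API exposes for Whisper. The helper names are hypothetical. The size check reflects the API's 25 MB upload limit, which means longer recordings would need to be split or compressed first.

```python
import os

# OpenAI's transcription endpoint rejects uploads larger than 25 MB.
MAX_UPLOAD_BYTES = 25 * 1024 * 1024


def fits_upload_limit(path: str) -> bool:
    """Return True if the audio file is small enough to send in a single request."""
    return os.path.getsize(path) <= MAX_UPLOAD_BYTES


def transcribe_audio(path: str, model: str = "whisper-1") -> str:
    """Upload a local audio file to OpenAI's transcription endpoint and return the text."""
    if not fits_upload_limit(path):
        raise ValueError(f"{path} exceeds the 25 MB upload limit; split or compress it first")
    from openai import OpenAI  # imported lazily so the size check works without the SDK

    client = OpenAI()  # reads OPENAI_API_KEY from the environment
    with open(path, "rb") as audio_file:
        transcript = client.audio.transcriptions.create(model=model, file=audio_file)
    return transcript.text
```

Checking the size locally before uploading avoids paying for a round trip that the API would reject anyway.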

### Embeddings

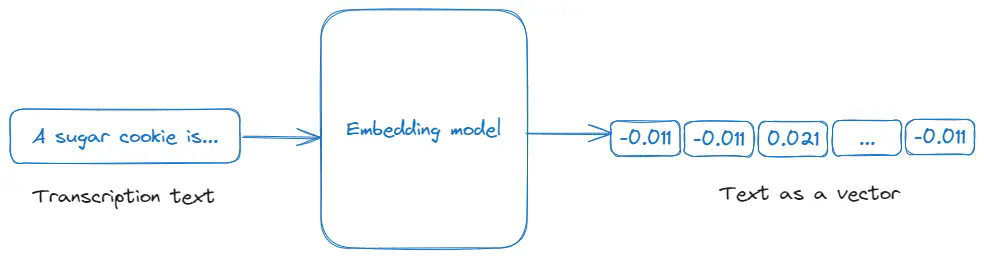

Embeddings are numerical (vector) representations of text or other data that capture its meaning in a form that machine learning algorithms can process. In this application, video transcriptions are converted into embeddings so that they can be compared with the user's input to find the most relevant passages. This enables efficient search and response generation. For more details, see OpenAI's [Embeddings](https://platform.openai.com/docs/guides/embeddings) documentation.
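The embed-and-query flow can be illustrated with a minimal sketch. It splits a transcript into word-count chunks, embeds them with the `openai` package, and ranks the chunks against a query embedding by cosine similarity. The chunk size, the model name `text-embedding-3-small`, and all function names are assumptions. The application may chunk, store, or search its vectors differently.

```python
import math


def chunk_transcript(text: str, max_words: int = 200) -> list[str]:
    """Split a transcript into consecutive chunks of at most max_words words."""
    words = text.split()
    return [" ".join(words[i:i + max_words]) for i in range(0, len(words), max_words)]


def embed_texts(texts: list[str], model: str = "text-embedding-3-small") -> list[list[float]]:
    """Request one embedding vector per input string from the OpenAI Embeddings API."""
    from openai import OpenAI  # imported lazily; only needed when calling the API

    client = OpenAI()  # reads OPENAI_API_KEY from the environment
    response = client.embeddings.create(model=model, input=texts)
    return [item.embedding for item in response.data]


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def most_relevant(query_vec: list[float], chunk_vecs: list[list[float]], k: int = 3) -> list[int]:
    """Return the indices of the k chunks most similar to the query, best match first."""
    ranked = sorted(
        range(len(chunk_vecs)),
        key=lambda i: cosine_similarity(query_vec, chunk_vecs[i]),
        reverse=True,
    )
    return ranked[:k]
```

In use, the steps run in order:

1. Call `chunks = chunk_transcript(transcript)` and `chunk_vecs = embed_texts(chunks)` once per video.
2. For each question, call `query_vec = embed_texts([question])[0]`.
3. Call `most_relevant(query_vec, chunk_vecs)` to choose which chunks to pass to the chat model.

A linear scan like this is adequate for a handful of videos. Larger collections usually call for a dedicated vector index.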